In a recent article, I wrote that capitalism, not AI, is the reason generative AI tools are used unethically to exploit people.

For instance, generating images entirely with AI is arguably putting some artists out of work. (Such images are not legally considered “art” in the United States and most of Europe, but are considered “art” in the UK and China under specific circumstances.) This may be conjecture, though, as many appreciators of art are inspired by the vision of the artist rather than the imagery of the work itself.

But talk is cheap.

If I truly believe that AI can be used for good purposes, then I should find ways to use generative AI tools as ethically as possible for a positive purpose.

For the past 24 hours, I have been implementing and deploying agentic AI projects that answer user inquiries while leading users to external resources designed to spark further curiosity. Gajos and Mamykina (of Harvard and Columbia, respectively) published a 2022 article showing that extensive AI explanations for content help learners retain it. However, when learners were also given recommendations, some did not retain as much content, while others retained even more. Thus, finding ways to help users engage with AI while sparking their curiosity or suggesting more resources could be a tangible way to increase users’ learning.

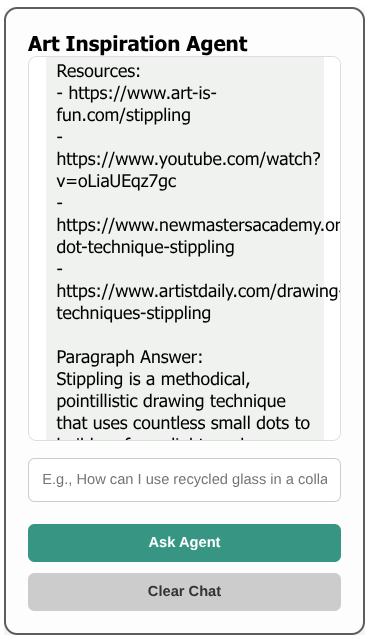

For instance, my Art Inspiration Agent project takes user inquiries, processes them using DeepSeek’s Reasoner model, and provides an output with bullet points, resource lists, and a paragraph to answer the user’s inquiry and (hopefully) encourage them to engage further with the material. The hope is to encourage more people to make physical art and to give them enough clarity to start.
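To make that flow a little more concrete, here is a minimal sketch of how an inquiry might be packaged before it is sent to the model. It is not the project's actual code: the function name `build_request`, the wording of `SYSTEM_PROMPT`, and the model identifier in `MODEL_NAME` are all assumptions made for illustration, and the network call itself is left out.

```python
import json

# Assumed model identifier; check the provider's documentation before relying on it.
MODEL_NAME = "deepseek-reasoner"

# Hypothetical system prompt describing the three-part answer format.
SYSTEM_PROMPT = (
    "You are an art inspiration assistant. Answer the user's question with: "
    "(1) a few bullet points, (2) a list of external resources to explore, "
    "and (3) a short closing paragraph that encourages them to try the "
    "technique themselves."
)


def build_request(inquiry: str) -> dict:
    """Return a chat-style request payload for a single user inquiry."""
    cleaned = inquiry.strip()
    if not cleaned:
        raise ValueError("inquiry must not be empty")
    return {
        "model": MODEL_NAME,
        "messages": [
            # The system message sets the answer format for every inquiry.
            {"role": "system", "content": SYSTEM_PROMPT},
            # The user message carries the inquiry with surrounding whitespace removed.
            {"role": "user", "content": cleaned},
        ],
    }


if __name__ == "__main__":
    payload = build_request("What is stippling, and how do I get started?")
    print(json.dumps(payload, indent=2))
```

Keeping the format instructions in one system prompt means every answer arrives in the same shape (bullets, resources, closing paragraph), whatever the user asks.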

The following was an inquiry I made about a technique called “stippling”, which is essentially using a series of dots (by paint, charcoal, or other media) to create artwork:

As you can see, the model provided both simple explanations and helpful resources to encourage the user to learn more.

Meanwhile, this political psychology agent answers inquiries in political psychology. For instance, in the screenshot below, I prompted DeepSeek about policy proposals to mitigate political polarization online:

And again, the model provided domain-specific answers and citations to back each point.

Why does this bring me joy?

Because I have always wanted to use technology and coding to help others who share my curiosity about a vast array of subjects. In the past, however, I simply lacked the technical skills to implement these solutions.

Thanks to AI coding tools like Replit and more general-use AI chatbots such as Google Gemini and ChatGPT, those of us with skills gaps can now implement and deploy applications that are actually useful to an audience.

This makes me happier than I thought it would.

Am I worried about AI “replacing” me? In some ways, yes, but in other ways, no. AI was used as the justification for my recent layoff, but AI is also what has empowered me to build tools on my own.

AI has already helped so many others build their own apps, platforms, and businesses, and maybe it can help me too as I navigate this post-layoff, post-graduation period and my next graduate school journey.

But, of course, my goal here is not to promote unethical use cases for AI, but instead to show that AI can be used for learning if the user is willing to use it that way.

Also, providing short responses is a LOT cheaper than providing extensive details. Keeping responses short may also help users learn much more, especially given the existing resources available for any given subject matter.
